import glob
import os

import cv2
import numpy as np
from matplotlib import pyplot as plt

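def cv_show(name, img):
    # Show an image in an OpenCV window and wait for a key press.
    cv2.imshow(name, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()


def plt_show0(img):
    # Show a color image with matplotlib; OpenCV stores BGR, matplotlib expects RGB.
    b, g, r = cv2.split(img)
    img = cv2.merge([r, g, b])
    plt.imshow(img)
    plt.show()


def plt_show(img):
    # Show a single-channel (grayscale or binary) image.
    plt.imshow(img, cmap='gray')
    plt.show()


def read_directory(directory_name):
    # Return the paths of all files in a template directory.
    referImg_list = []
    for filename in os.listdir(directory_name):
        referImg_list.append(os.path.join(directory_name, filename))
    return referImg_list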

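# Load the source image (OpenCV reads it in BGR channel order).
rawImage = cv2.imread('./img/car2.png')
if rawImage is None:
    raise FileNotFoundError('Could not read ./img/car2.png')
plt_show0(rawImage)

# Denoise, then convert to grayscale (BGR input, so use COLOR_BGR2GRAY).
image = cv2.GaussianBlur(rawImage, (3, 3), 0)
gray_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# dx=1, dy=0 gives the horizontal gradient, which responds to vertical edges.
sobel_x = cv2.Sobel(gray_image, cv2.CV_16S, 1, 0)
image = cv2.convertScaleAbs(sobel_x)

# Binarize with Otsu's automatically chosen threshold.
ret, image = cv2.threshold(image, 0, 255, cv2.THRESH_OTSU)

# Close with a wide kernel so neighboring edges merge into blobs.
kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (17, 5))
image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernelX, iterations=1)

# Horizontal closing (dilate, erode), then vertical opening (erode, dilate).
kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (17, 1))
kernelY = cv2.getStructuringElement(cv2.MORPH_RECT, (1, 13))
image = cv2.dilate(image, kernelX)
image = cv2.erode(image, kernelX)
image = cv2.erode(image, kernelY)
image = cv2.dilate(image, kernelY)

# Median filter to remove remaining small specks.
image = cv2.medianBlur(image, 15)

# Two return values assume OpenCV 4.x (3.x returns three).
contours, hierarchy = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
image1 = rawImage.copy()
cv2.drawContours(image1, contours, -1, (0, 0, 255), 5)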

# Build a color mask for blue and green plates in HSV space.
image2 = rawImage.copy()
hsv = cv2.cvtColor(image2, cv2.COLOR_BGR2HSV)

lower_blue = (100, 43, 46)
upper_blue = (124, 255, 255)
lower_green = (35, 43, 46)
upper_green = (77, 255, 255)

mask_blue = cv2.inRange(hsv, lower_blue, upper_blue)
mask_green = cv2.inRange(hsv, lower_green, upper_green)

# Opening removes small isolated regions from each mask.
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))
mask_blue = cv2.morphologyEx(mask_blue, cv2.MORPH_OPEN, kernel)
mask_green = cv2.morphologyEx(mask_green, cv2.MORPH_OPEN, kernel)

mask = cv2.bitwise_or(mask_blue, mask_green)
filtered = cv2.bitwise_and(image2, image2, mask=mask)

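plates = []
for contour in contours:
    area = cv2.contourArea(contour)
    x, y, w, h = cv2.boundingRect(contour)

    # A plate is roughly 2 to 6 times wider than it is tall.
    ratio = w / h
    if ratio < 2 or ratio > 6:
        print('Aspect ratio check failed')
        continue

    # The contour should fill at least half of its bounding rectangle.
    rect_area = w * h
    coverage = area / rect_area
    if coverage < 0.5:
        print('Contour-to-rectangle coverage check failed')
        continue

    # At least half of the rectangle should be plate-colored (blue or green).
    mask_area = cv2.countNonZero(mask[y:y+h, x:x+w])
    mask_coverage = mask_area / rect_area
    if mask_coverage < 0.5:
        print('Mask coverage check failed')
        continue

    plates.append((x, y, w, h))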

if len(plates) == 0:
    print("No plates found.")
elif len(plates) > 1:
    print("Multiple plates found.")
else:
    x, y, w, h = plates[0]
    image4 = image2.copy()  # unmarked copy, used for cropping
    cv2.rectangle(image2, (x, y), (x + w, y + h), (0, 255, 0), 1)
    plt_show0(image2)
    image3 = image4[y:y + h, x:x + w]
    # cv2.imwrite does not create missing directories.
    os.makedirs('./car_license', exist_ok=True)
    cv2.imwrite('./car_license/test1.png', image3)

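# Reload the cropped plate saved in the previous step.
license = cv2.imread('./car_license/test1.png')
if license is None:
    raise FileNotFoundError('Could not read ./car_license/test1.png')

licence_GB = cv2.GaussianBlur(license, (1, 3), 0)
licence_gray = cv2.cvtColor(licence_GB, cv2.COLOR_BGR2GRAY)
ret, img = cv2.threshold(licence_gray, 0, 255, cv2.THRESH_OTSU)

# Invert a mostly white image so the background becomes black.
mean = np.mean(img)
if mean > 128:
    img = 255 - img

# Slight horizontal dilation before extracting character contours.
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (2, 1))
img_dilated = cv2.dilate(img, kernel)

number_contours, hierarchy = cv2.findContours(img_dilated, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# img1 is a BGR color image, so display it with plt_show0 rather than the grayscale helper.
img1 = license.copy()
cv2.drawContours(img1, number_contours, -1, (0, 255, 0), 1)
plt_show0(img1)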

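chars = []
for cnt in number_contours:
    x, y, w, h = cv2.boundingRect(cnt)
    # Discard boxes that are too small or too large to be a character.
    if w < 5 or h < 10 or w > 50 or h > 50:
        continue
    # Keep only boxes that are taller than they are wide.
    ratio = w / h
    if ratio < 0.25 or ratio > 0.7:
        continue
    chars.append((x, y, w, h))

# Order the characters left to right.
chars = sorted(chars, key=lambda box: box[0])

# cv2.imwrite does not create missing directories.
os.makedirs('./words', exist_ok=True)
for i, (x, y, w, h) in enumerate(chars, start=1):
    char = license[y:y + h, x:x + w]
    char = cv2.resize(char, (64, 64))
    cv2.imwrite('./words/test2_' + str(i) + '.png', char)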

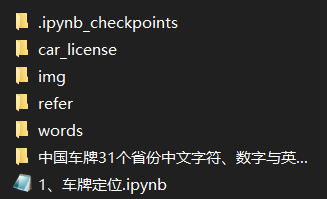

templates = ['0','1','2','3','4','5','6','7','8','9','A','B','C','D','E','F','G','H','J','K','L','M','N','P','Q','R','S','T','U','V','W','X','Y','Z','京','津','冀','晋','蒙','辽','吉','黑','沪','苏','浙','皖','闽','赣','鲁','豫','鄂','湘','粤','桂','琼','渝','川','贵','云','藏','陕','甘','青','宁','新']

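folder_path = './words/'
image_format = '*.png'
# glob.glob returns files in arbitrary order; sort so characters are processed left to right.
images_path = sorted(glob.glob(folder_path + image_format))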

def method_A(imageA):
    # Match the first character against the province (Chinese) templates.
    # templates[34:] holds the provinces; the upper bound must include the last entry.
    c_words = []
    for i in range(34, len(templates)):
        c_word = read_directory('./refer/' + templates[i])
        c_words.append(c_word)

    chinese_Gaussian = cv2.GaussianBlur(imageA, (3, 3), 0)
    chinese_gray = cv2.cvtColor(chinese_Gaussian, cv2.COLOR_BGR2GRAY)
    ret, chinese_threshold = cv2.threshold(chinese_gray, 0, 255, cv2.THRESH_OTSU)
    # Invert if the binarized image is mostly white.
    mean = np.mean(chinese_threshold)
    if mean > 100:
        chinese_threshold = 255 - chinese_threshold

    best_score = []
    for c_word in c_words:
        score = []
        for word in c_word:
            # np.fromfile + imdecode reads paths containing non-ASCII characters.
            template_img = cv2.imdecode(np.fromfile(word, dtype=np.uint8), 1)
            template_img = cv2.cvtColor(template_img, cv2.COLOR_BGR2GRAY)
            ret, template_img = cv2.threshold(template_img, 0, 255, cv2.THRESH_OTSU)
            height, width = template_img.shape
            # Same-size inputs make matchTemplate return a single 1x1 score.
            image_c = cv2.resize(chinese_threshold, (width, height))
            result = cv2.matchTemplate(image_c, template_img, cv2.TM_CCOEFF)
            score.append(result[0][0])
        # Keep the best score among this character's templates.
        best_score.append(max(score))

    best = best_score.index(max(best_score))
    best_chinese = templates[34 + best]
    print(best_chinese)

def method_B(imageA):
    # Match the second character against the letter templates (templates[10:34]).
    E_words = []
    for i in range(10, 34):
        E_word = read_directory('./refer/' + templates[i])
        E_words.append(E_word)

    English_Gaussian = cv2.GaussianBlur(imageA, (3, 3), 0)
    English_gray = cv2.cvtColor(English_Gaussian, cv2.COLOR_BGR2GRAY)
    ret, English_threshold = cv2.threshold(English_gray, 0, 255, cv2.THRESH_OTSU)
    # Invert if the binarized image is mostly white.
    mean = np.mean(English_threshold)
    if mean > 100:
        English_threshold = 255 - English_threshold

    best_score = []
    for E_word in E_words:
        score = []
        for word in E_word:
            template_img = cv2.imdecode(np.fromfile(word, dtype=np.uint8), 1)
            template_img = cv2.cvtColor(template_img, cv2.COLOR_BGR2GRAY)
            ret, template_img = cv2.threshold(template_img, 0, 255, cv2.THRESH_OTSU)
            height, width = template_img.shape
            # Same-size inputs make matchTemplate return a single 1x1 score.
            image_E = cv2.resize(English_threshold, (width, height))
            result = cv2.matchTemplate(image_E, template_img, cv2.TM_CCOEFF)
            score.append(result[0][0])
        # Keep the best score among this character's templates.
        best_score.append(max(score))

    best = best_score.index(max(best_score))
    best_English = templates[10 + best]
    print(best_English)

def method_C(imageA):
    # Match the remaining characters against digit and letter templates (templates[0:34]).
    E_words = []
    for i in range(0, 34):
        E_word = read_directory('./refer/' + templates[i])
        E_words.append(E_word)

    English_Gaussian = cv2.GaussianBlur(imageA, (3, 3), 0)
    English_gray = cv2.cvtColor(English_Gaussian, cv2.COLOR_BGR2GRAY)
    ret, English_threshold = cv2.threshold(English_gray, 0, 255, cv2.THRESH_OTSU)
    # Invert if the binarized image is mostly white.
    mean = np.mean(English_threshold)
    if mean > 100:
        English_threshold = 255 - English_threshold

    best_score = []
    for E_word in E_words:
        score = []
        for word in E_word:
            template_img = cv2.imdecode(np.fromfile(word, dtype=np.uint8), 1)
            template_img = cv2.cvtColor(template_img, cv2.COLOR_BGR2GRAY)
            ret, template_img = cv2.threshold(template_img, 0, 255, cv2.THRESH_OTSU)
            height, width = template_img.shape
            # Same-size inputs make matchTemplate return a single 1x1 score.
            image_E = cv2.resize(English_threshold, (width, height))
            result = cv2.matchTemplate(image_E, template_img, cv2.TM_CCOEFF)
            score.append(result[0][0])
        # Keep the best score among this character's templates.
        best_score.append(max(score))

    best = best_score.index(max(best_score))
    best_English = templates[0 + best]
    print(best_English)

# First character: province; second: letter; the rest: digits or letters.
count = 0
for image_path in images_path:
    imageA = cv2.imread(image_path)
    if count == 0:
        method_A(imageA)
    elif count == 1:
        method_B(imageA)
    else:
        method_C(imageA)
    count += 1
